Bluetooth Audio

Bluetooth History

Bluetooth Classic – BR/EDR

The first “incarnation” of Bluetooth – from around 1999 – is known today as Bluetooth Classic. Obviously it wasn’t “classic” at first. This wireless standard was basically a substitute for a wire between two user-selected devices – like a PC and a mouse.

The Bluetooth communication was (and still is) performed in the “unlicensed” 2.4 GHz band. “Unlicensed” means that anyone may use it without paying for a license – although there are plenty of regulations on how. The original raw data-rate was 1 Mbps – aka BR – Basic Rate. Later, EDR – Enhanced Data Rate – added 2 and 3 Mbps. When documents refer to BR/EDR, they basically mean Bluetooth Classic. Being a wire-replacement means that the participants in the communication are not changed often.

Hands-Free Profile

To set up various parameters, Bluetooth uses “Profiles”. The HFP – Hands-Free Profile – was used to set up hands-free phone conversations in cars. HFP originally only supported mono, and was used in cars like Audi from around 2002. Later it caught on in trucks in e.g., “BlueParrot” solutions. This is still Bluetooth Classic – and still a user-selected wire-replacement.

Advanced Audio Distribution Profile

Around 2006 the Advanced Audio Distribution Profile (A2DP) was introduced. A2DP allowed for HiFi – using the SBC codec. A codec is a “Coder” on the transmitting side, paired with a “Decoder” on the receiving side, which together allow data to be compressed while it is in the air. You probably know MPEG and MP3, which are codecs used in TV and music, respectively. In these cases data is also compressed when stored.

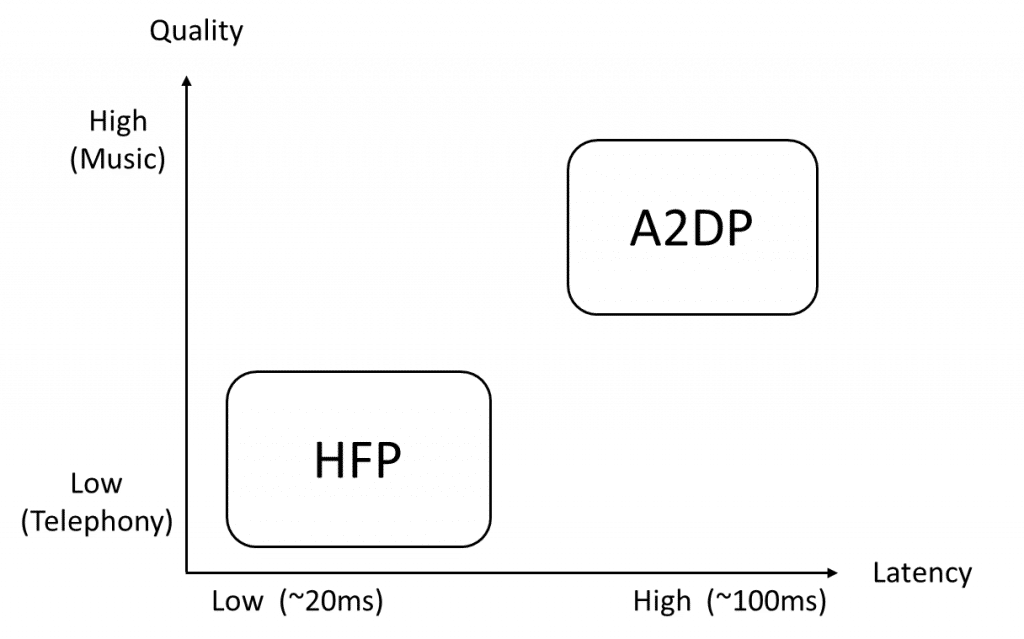

As the figure above shows, A2DP meant a latency of ~100 ms. This is not a huge problem when listening to music from a smart-phone – which soon became one of the most popular uses – and still is.

True Wireless

Note that we are still talking about Classic Bluetooth – BR/EDR. This means that there is basically still only a single point-to-point connection. This works fine in mono. It also works fine in stereo – as long as you use classic headphones where you can have the Bluetooth radio on e.g., the left side of the head, and connect to the right side via the band over the head.

As we all know, “True Wireless” devices have flooded the market. These use all sorts of customized solutions on top of Classic Bluetooth to support two detached devices. Thus the technology varies greatly from vendor to vendor.

Bluetooth Low Energy – aka Bluetooth Smart

With Bluetooth 4.0 – around 2010 – the world got the second incarnation of Bluetooth – Bluetooth Low Energy – also marketed as Bluetooth Smart. This is a major change from Bluetooth Classic – so much that it might have been called something completely different. Devices could now be “single mode” – supporting either Bluetooth Classic (BR/EDR) or Bluetooth Low Energy (LE). They could also be “dual mode” – supporting both. In reality most devices are probably single mode, since the use cases are so different. As stated, Bluetooth Classic is a wire replacement where the “wire” is manually “moved” by the user-application to connect to a new device – e.g., when you leave one car and go into another. Bluetooth Low Energy, on the other hand, is a packet-based network where devices address packets to different devices all the time.

The main target for BLE is IoT, where many very small devices (peripherals) can deliver information when relevant (polled by the central device as well as broadcast by peripherals). Messages are short and rare, and many tricks are used to keep the power consumption down in the peripherals, so that they may run for a long time on small button-cells. This was a huge step closer to the “Intelligent Home” and other IoT-solutions – but had far too little bandwidth for audio.

Note that to become IoT-relevant, BLE needs a bridge to the internet. Whereas Wi-Fi uses the TCP/IP stack like the internet – making it relatively easy to integrate into IoT – BLE is not so easy to integrate, and is therefore mostly used in “Personal Area Networks” – with e.g., a phone as the Central device. In this case the phone can also be the bridge to the internet.

BLE Audio and Auracast

As stated above, BLE offered a very low bandwidth, which made it unsuitable for audio. However, it had some very interesting capabilities that were hard to ignore when dealing with audio. Packet-based networks allow for addressing individual ear-pods and hearing aids – as well as for broadcasting. We already had these features with Wi-Fi. However, Wi-Fi has developed towards fantastic transfer-speeds – but uses way too much energy. A small earbud cannot power a Wi-Fi radio for long – let alone hearing aids, which are smaller and worn for longer. BLE, however, is inherently low-power. So was there a way to combine all these interesting capabilities?

As it turned out – there was. With Bluetooth 5.2 – around 2020 – we got BLE Audio. This is a huge step on top of BLE – but it still obeys the basic “laws of BLE”, so I don’t think we can call it a third incarnation of Bluetooth – but it’s close.

Bluetooth 5.2 allows:

- Separate synchronized signals for many channels – not just stereo, but surround sound and even more (up to 31 channels).

- Encrypted connection between e.g., a phone and a connected set of devices (std. BLE).

- Broadcasts – encrypted or not – between a “broadcaster” and an unlimited number of listeners. This enables silent discos and sports-bars, as well as churches, museums, airport and bus-station announcements etc. Different languages may be broadcast for the user to select the preferred version. This broadcast technology is marketed as Auracast.

- Low latency compared to e.g., the SBC codec. This is important to avoid annoying users when the direct airborne sound – from e.g., a TV – reaches the ear much earlier than the Bluetooth signal.

The rest of this page deals with the key technologies behind BLE Audio.

Main BLE Audio Building Blocks

The Bluetooth “core spec” is now more than 3000 pages. We cannot in any way include all content here, but I will try to give an overview of the pieces most important to audio handling – new and old.

Bluetooth Physical Layer

One of the most defining characteristics of all Bluetooth variants is Frequency Hopping. Whereas e.g., Wi-Fi is preset to a specific band (channel), Bluetooth jumps between channels in a pseudo-random pattern. An adaptive Channel Map can be updated dynamically, so that channels disturbed by noise – e.g., from Wi-Fi – are avoided and the undisturbed ones are used instead. This is quite advanced and puts tough requirements on the radio, which needs to retune to new frequencies all the time, and on the scheduler in each device, which decides when frequencies are changed. To help devices find each other at the right time and on the right channels, BLE uses three specific channels to broadcast this information as advertisements.
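As a rough illustration of how an adaptive Channel Map steers the hopping, here is a minimal Python sketch of the original BLE Channel Selection Algorithm #1, as I understand it from the core spec: each hop adds a fixed hop increment (5 to 16) to the previous “unmapped” channel, modulo 37, and if that channel is marked bad in the map, it is remapped onto the list of good channels. The function names and the example channel map are hypothetical, and BLE Audio’s isochronous channels use the more elaborate Channel Selection Algorithm #2, so this only shows the principle.

```python
from typing import List, Tuple

NUM_DATA_CHANNELS = 37  # BLE data channel indices 0..36


def csa1_next_channel(last_unmapped: int, hop_increment: int,
                      used_channels: List[int]) -> Tuple[int, int]:
    """One hop of Channel Selection Algorithm #1 (simplified sketch).

    used_channels: ascending list of channel indices marked 'good' in the
    channel map. Returns (new_unmapped_channel, channel_actually_used).
    """
    if not 5 <= hop_increment <= 16:
        raise ValueError("hop increment must be in the range 5..16")
    unmapped = (last_unmapped + hop_increment) % NUM_DATA_CHANNELS
    if unmapped in used_channels:
        return unmapped, unmapped
    # The channel is marked bad: remap it onto the list of good channels.
    remap_index = unmapped % len(used_channels)
    return unmapped, used_channels[remap_index]


def hop_sequence(hop_increment: int, used_channels: List[int], hops: int) -> List[int]:
    """Return the channels used for the next `hops` connection events."""
    last_unmapped = 0  # starting point for the first event
    sequence = []
    for _ in range(hops):
        last_unmapped, channel = csa1_next_channel(last_unmapped, hop_increment, used_channels)
        sequence.append(channel)
    return sequence


if __name__ == "__main__":
    # Hypothetical channel map: channels 0..9 are marked bad (e.g. noisy).
    good = list(range(10, 37))
    print(hop_sequence(7, good, 6))  # [17, 14, 21, 28, 35, 15]
```

Note how the bad channels 7 and 5 are never used: the unmapped sequence still visits them, but they are silently replaced by good channels (17 and 15).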

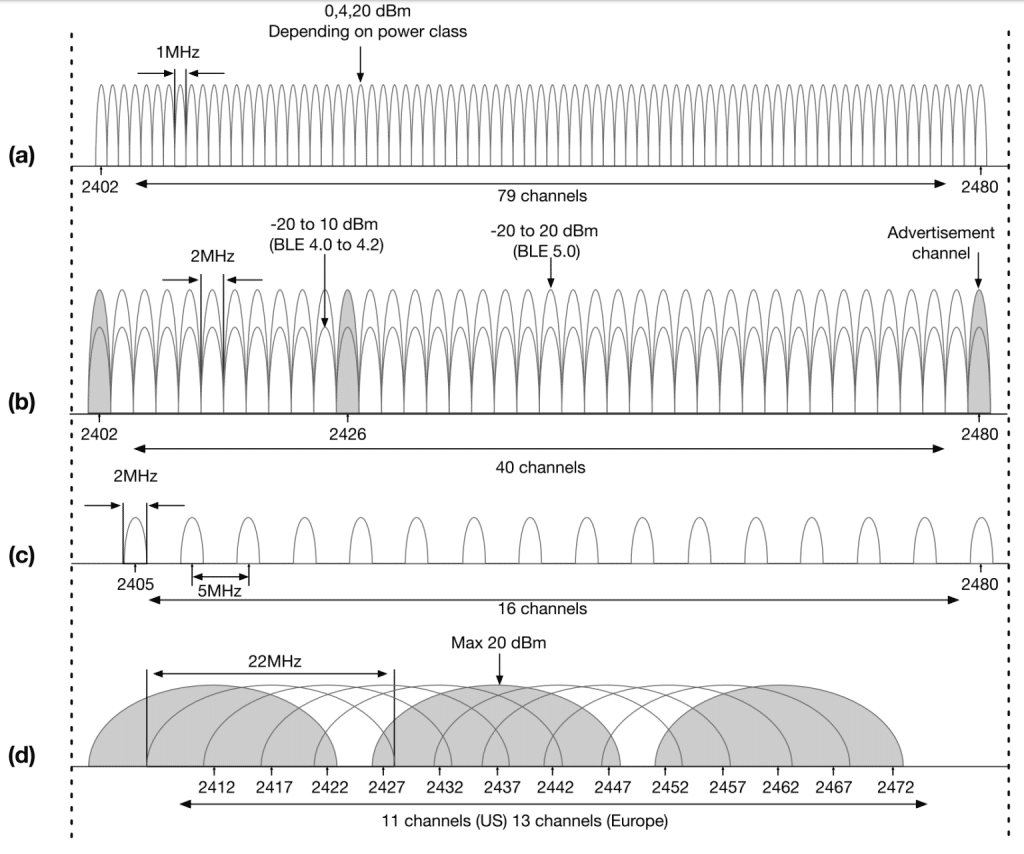

The figure above shows the following:

a) Bluetooth Classic with 79 channels – each 1 MHz wide. No advertisements are used here, as it is basically a point-to-point stream, set up at application level. Basic Rate (BR) = 1 Mbps. Enhanced Data Rate (EDR) = 2 or 3 Mbps.

b) BLE with 40 channels – each 2 MHz wide. The three advertising channels are placed to fit around the channels of an ideal Wi-Fi setup (1, 6 and 11) – see graph d). This helps keep the advertising channels free from noise – although there are no guarantees. LE = 1 Mbps – later 2 Mbps – uses less power than BR/EDR due to a different physical coding of symbols. Note that a higher Tx power is possible with BLE 5.0 – but not allowed in the EU.

c) Zigbee – not discussed here

d) 2.4 GHz Wi-Fi supporting 11 or 13 channels – but only 3 non-overlapping ones can be used simultaneously: channels 1, 6 and 11. The wider channels here allow for much higher data-rates (using more power).
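The channel layouts in a), b) and d) can be checked with a little arithmetic. The Python sketch below maps channel numbers to centre frequencies, assuming the usual formulas: 2402 + k MHz for Classic, 2 MHz spacing for BLE with advertising channels 37, 38 and 39 at 2402, 2426 and 2480 MHz, and 2407 + 5n MHz for Wi-Fi. Assuming 20 MHz-wide Wi-Fi channels, a BLE channel at least 10 MHz from a Wi-Fi centre lies at or beyond that channel’s nominal edge. The function names are my own.

```python
def classic_channel_mhz(k: int) -> int:
    """Bluetooth Classic (BR/EDR): 79 channels, 1 MHz apart, from 2402 MHz."""
    if not 0 <= k <= 78:
        raise ValueError("BR/EDR channel must be 0..78")
    return 2402 + k


def ble_channel_mhz(index: int) -> int:
    """BLE channel index -> centre frequency (40 channels, 2 MHz apart).

    Advertising channels 37, 38 and 39 sit at 2402, 2426 and 2480 MHz;
    data channels 0..36 fill the remaining slots in ascending order.
    """
    if index == 37:
        return 2402
    if index == 38:
        return 2426
    if index == 39:
        return 2480
    if 0 <= index <= 10:
        return 2404 + 2 * index
    if 11 <= index <= 36:
        return 2428 + 2 * (index - 11)
    raise ValueError("BLE channel index must be 0..39")


def wifi_channel_mhz(n: int) -> int:
    """2.4 GHz Wi-Fi channel n (1..13) centre frequency."""
    if not 1 <= n <= 13:
        raise ValueError("Wi-Fi channel must be 1..13")
    return 2407 + 5 * n


if __name__ == "__main__":
    wifi = [wifi_channel_mhz(n) for n in (1, 6, 11)]  # [2412, 2437, 2462]
    for adv in (37, 38, 39):
        f = ble_channel_mhz(adv)
        distance = min(abs(f - w) for w in wifi)
        print(f"adv channel {adv}: {f} MHz, {distance} MHz from nearest Wi-Fi centre")
```

Running it shows the three advertising channels at 10, 11 and 18 MHz from the nearest of Wi-Fi channels 1, 6 and 11.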

As stated, the advertising channels were invented with BLE. Classic Bluetooth did not need advertisements – being a wire replacement, the parties were already connected.

Note that each combination of symbol-encoding and bit-rate constitutes a separate PHY. Each PHY is certified according to international regulations. This means that if you e.g., use 1 Mbps on the three advertising channels and 2 Mbps on the other 37 channels, then the 1 Mbps PHY is NOT considered to use frequency hopping, as it only uses three channels. This makes certification challenging.

BLE Audio upper layers

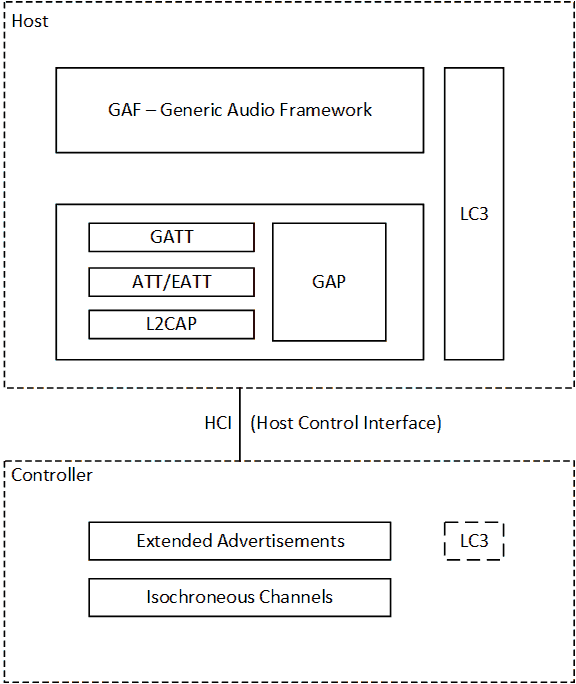

The figure above shows the most important architectural building blocks for handling BLE Audio. The following were already in place with BLE in Bluetooth 4.0 (some of them, like HCI, L2CAP and GAP, date back to Bluetooth Classic):

- Host: The host is implemented in software, just below the application layer. It is not time-critical – it administrates the configuration via profiles that can be seen as client-processes. You can say that the Host defines WHAT is communicated.

- Controller: The controller is often realized in dedicated hardware/firmware – like a USB-dongle. It controls the radio and implements the time-critical scheduler. It keeps arrays of information on devices based on their state and characteristics and may be polled by – or notify – the host. You can say that the Controller defines WHEN we communicate.

- HCI: The Host-Controller Interface is often a hardware bus – SPI or USB – but it does not have to be. Host and Controller could be realized on the same single microcontroller – like in many Nordic devices. The commands here are standardized, and many can be tested from the command line with e.g., hcitool. This is possible because all the time-critical stuff happens in the controller. See my page on Zephyr.

- GATT: Generic Attribute Profile. Defines services, characteristics and descriptors. Can be seen as access to the device’s database.

- ATT: The Attribute Protocol is used by GATT to deal with the attributes that are exposed by services.

- GAP: Generic Access Profile. Defines overall modes, advertising schemes and security. A good place to start reading.

- L2CAP: The Logical Link Control and Adaptation Protocol sits on top of the link layer. L2CAP handles encapsulation, scheduling, segmentation/reassembly and retransmissions.
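To show what “standardized commands” means in practice, here is a minimal Python sketch that builds an HCI command packet: a 16-bit opcode made from a 6-bit OGF (command group) and a 10-bit OCF (command within the group), sent little-endian, followed by a parameter-length byte and the parameters. The leading 0x01 byte is the packet-type indicator used on a UART (H4) transport; other transports frame packets differently. The two example commands (HCI_Reset and HCI_LE_Set_Scan_Enable) are from the core spec as far as I know; the helper function names are hypothetical.

```python
import struct

HCI_COMMAND_PACKET = 0x01  # H4 (UART) packet indicator for an HCI command


def hci_opcode(ogf: int, ocf: int) -> int:
    """Combine the 6-bit OGF (group) and 10-bit OCF (command) into an opcode."""
    if not (0 <= ogf < 0x40 and 0 <= ocf < 0x400):
        raise ValueError("OGF must fit in 6 bits and OCF in 10 bits")
    return (ogf << 10) | ocf


def hci_command(ogf: int, ocf: int, params: bytes = b"") -> bytes:
    """Build an H4-framed HCI command: indicator, opcode (little-endian),
    parameter length, parameters."""
    if len(params) > 255:
        raise ValueError("HCI command parameters are limited to 255 bytes")
    header = struct.pack("<BHB", HCI_COMMAND_PACKET, hci_opcode(ogf, ocf), len(params))
    return header + params


if __name__ == "__main__":
    # HCI_Reset: OGF 0x03 (Controller & Baseband), OCF 0x0003, no parameters.
    print(hci_command(0x03, 0x0003).hex(" "))  # 01 03 0c 00
    # HCI_LE_Set_Scan_Enable: OGF 0x08 (LE), OCF 0x000C,
    # params: LE_Scan_Enable = 1, Filter_Duplicates = 0.
    print(hci_command(0x08, 0x000C, bytes([1, 0])).hex(" "))  # 01 0c 20 02 01 00
```

Because the format is fixed by the spec, the same bytes work against any compliant controller, which is what makes command-line testing of the controller possible.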

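The following are the parts that were added to give us BLE Audio. Note that not all of them arrived with Bluetooth 5.2: Extended Advertising came with Bluetooth 5.0, and GAF and LC3 are specified outside the core spec.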

- GAF: The Generic Audio Framework is a piece of middleware that governs profiles and services – allowing multiple applications above it to use the layers below in parallel. We will not go into much detail with this here.

- Extended Advertising: Describes new advertising schemes designed for information stands and BLE Audio. We will dig more into this – especially the subset Periodic Advertising.

- EATT: From the core spec: Enhanced ATT – EATT – supports concurrent transactions, allows the interleaving of L2CAP packets relating to ATT packets from different applications, and allows the ATT Maximum Transmission Unit (MTU) to be changed during a connection. This is not directly relevant to audio processing, although it improves parallel handling – and thereby latency. It also improves flow control and security. We will not dig deeper into this here.

- Isochronous Channels: These are the new streams of audio data that are synchronized between channels. Isochronous means that specific events are repeated at fixed intervals, and that time-differences are integer multiples of a common base. This is the main protocol vehicle for audio and we will look further into Isochronous Channels.

- LC3: Low Complexity Communication Codec. This is a fantastic codec that allows music and speech to be highly compressed while keeping a high quality. It is typically implemented in software in the host – but could also be delegated to the controller for firmware implementation. We will study LC3 a bit more in the following.
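The “integer multiples of a common base” can be made concrete. As I read the spec, the ISO interval is expressed in units of 1.25 ms (from 5 ms up to 4 s), so a 10 ms LC3 frame becomes 8 units and a 7.5 ms frame becomes 6 units, and every isochronous event falls exactly on an anchor point a whole number of intervals after the first one. The Python sketch below works in integer microseconds to avoid rounding; the function names are hypothetical.

```python
from typing import List

ISO_INTERVAL_UNIT_US = 1250  # ISO interval is expressed in units of 1.25 ms


def iso_interval_units(interval_us: int) -> int:
    """Convert an ISO interval in microseconds to 1.25 ms units."""
    units, remainder = divmod(interval_us, ISO_INTERVAL_UNIT_US)
    if remainder:
        raise ValueError(f"{interval_us} us is not a multiple of 1.25 ms")
    if not 4 <= units <= 3200:  # 5 ms .. 4 s, as I read the spec
        raise ValueError("ISO interval out of range")
    return units


def anchor_points_us(first_anchor_us: int, interval_us: int, count: int) -> List[int]:
    """Isochronous events repeat at exact multiples of the ISO interval."""
    step = iso_interval_units(interval_us) * ISO_INTERVAL_UNIT_US
    return [first_anchor_us + k * step for k in range(count)]


if __name__ == "__main__":
    print(iso_interval_units(10_000))      # 8 units for a 10 ms LC3 frame
    print(iso_interval_units(7_500))       # 6 units for a 7.5 ms LC3 frame
    print(anchor_points_us(0, 10_000, 4))  # [0, 10000, 20000, 30000]
```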

CIS, BIS and Isochronous Channels

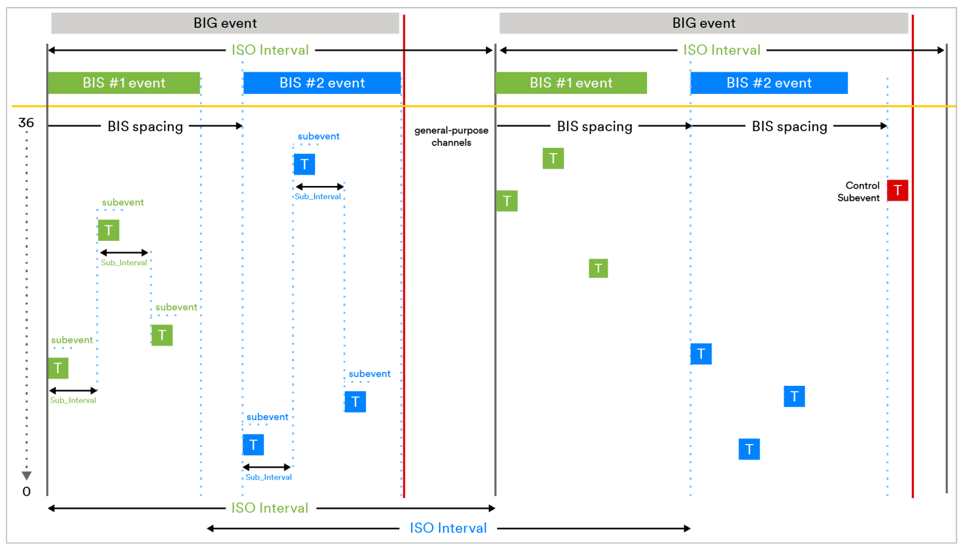

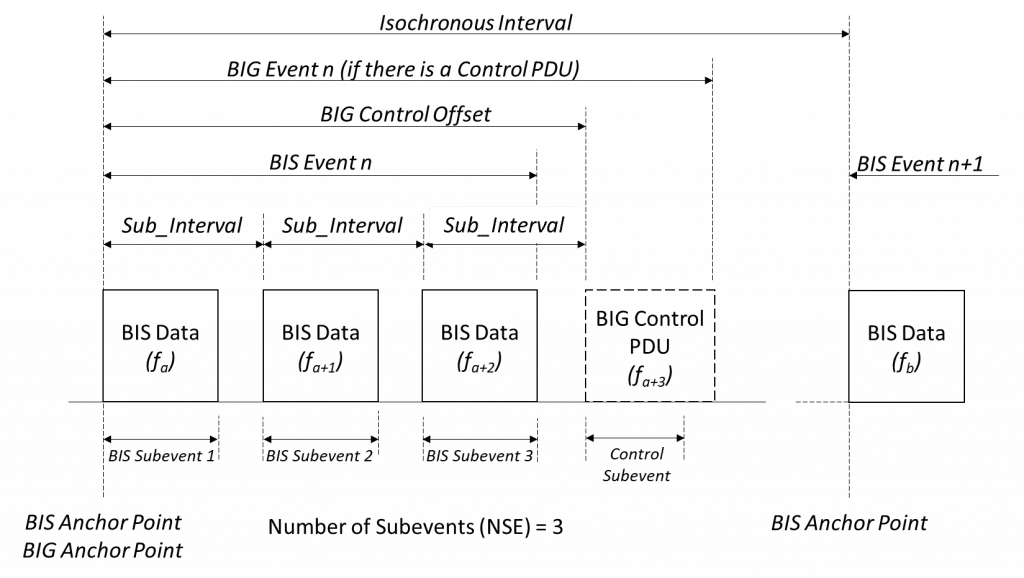

Broadcasts (BIS)

Bluetooth Low Energy already had the concept of broadcasts before BLE Audio. They were known as “advertisements”. As you may remember, these only took place on 3 of the 40 channels, and the same data is typically repeated on all three channels. Thus the bandwidth is pretty low. The new Isochronous Channels, however, allow for broadcasts with much higher payload. Broadcasts are also known as 1:N (transmitters : receivers). Broadcasts are never “connected” – the transmitting device does not know about its listeners – just think about radio or “flow-TV”.

- A BIG – Broadcast Isochronous Group – may contain one or more Broadcast Isochronous Streams – BIS.

- “Broadcasting” means: NO HANDSHAKES.

- The ISO interval is 10 ms in most scenarios – but can be longer. 10 ms corresponds to a typical LC3-frame (7.5 ms is also possible for LC3).

- Multiple sub-events in one ISO-interval is possible (and normal).

- Each sub-event is transmitted on a new channel – see the figure above.

- Each subevent can contain 10 ms worth of audio, since the air data-rate is much higher than the audio sampling rate, and the audio is compressed on top.

- Tx-time @ 2 Mbps for e.g., 60 bytes of LC3 data corresponds to ~300 µs.

- Subevents can be different audio-channels: Left/Right/Back Left/Back Right/Center/Mono. They can also be retransmissions of these.

- We can only “render” (start playing) after the last retransmission (see LC3).

- We can allow retransmissions in e.g., the next ISO interval – this improves the chances of delivery but, unfortunately, adds 10 ms to the latency.

- Note that since there are no handshakes, the transmitter does not know who received data – and who did not. Thus there will always be the stated number of retransmissions.

- The missing handshake is a challenge if we want encrypted broadcasts. The encryption key used here is known as a Broadcast Code. It has to be given to the listeners “out of band”. This means that it may be typed in manually, read from a QR code, received via NFC or what have you. However, in many cases it does not make sense to encrypt broadcasts.
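The numbers in the list above can be cross-checked with a small Python sketch. It assumes a 48 kbps LC3 stream with 10 ms frames, which gives the 60-byte frame mentioned above, and compares it with raw 16-bit mono PCM sampled at 24 kHz (an assumed sample rate). For the air time it assumes the LE 2M PHY (2 bits per microsecond) and an overhead of a 2-byte preamble, 4-byte access address, 2-byte header, 3-byte CRC and, when encrypted, a 4-byte MIC. All function names are hypothetical.

```python
def lc3_frame_bytes(bitrate_bps: int, frame_us: int) -> int:
    """Bytes per LC3 frame for a constant bitrate and frame duration."""
    bits = bitrate_bps * frame_us // 1_000_000
    return bits // 8


def pcm_frame_bytes(sample_rate_hz: int, frame_us: int, bytes_per_sample: int = 2) -> int:
    """Bytes of raw mono PCM covering one frame (16-bit samples by default)."""
    return sample_rate_hz * frame_us // 1_000_000 * bytes_per_sample


def le2m_airtime_us(payload_bytes: int, encrypted: bool = True) -> float:
    """Air time of one ISO packet on the LE 2M PHY (2 bits per microsecond).

    Assumed overhead: 2-byte preamble, 4-byte access address, 2-byte header,
    3-byte CRC, plus a 4-byte MIC when the stream is encrypted.
    """
    overhead = 2 + 4 + 2 + 3 + (4 if encrypted else 0)
    return (payload_bytes + overhead) * 8 / 2


if __name__ == "__main__":
    frame = lc3_frame_bytes(48_000, 10_000)
    print(frame)                                    # 60
    print(pcm_frame_bytes(24_000, 10_000))          # 480 bytes of raw PCM per 10 ms
    print(le2m_airtime_us(frame))                   # 300.0
    print(le2m_airtime_us(frame, encrypted=False))  # 284.0
```

With these assumptions, 10 ms of audio shrinks from 480 to 60 bytes and occupies the air for only about 0.3 ms, which is why several subevents and retransmissions fit easily into one ISO interval.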

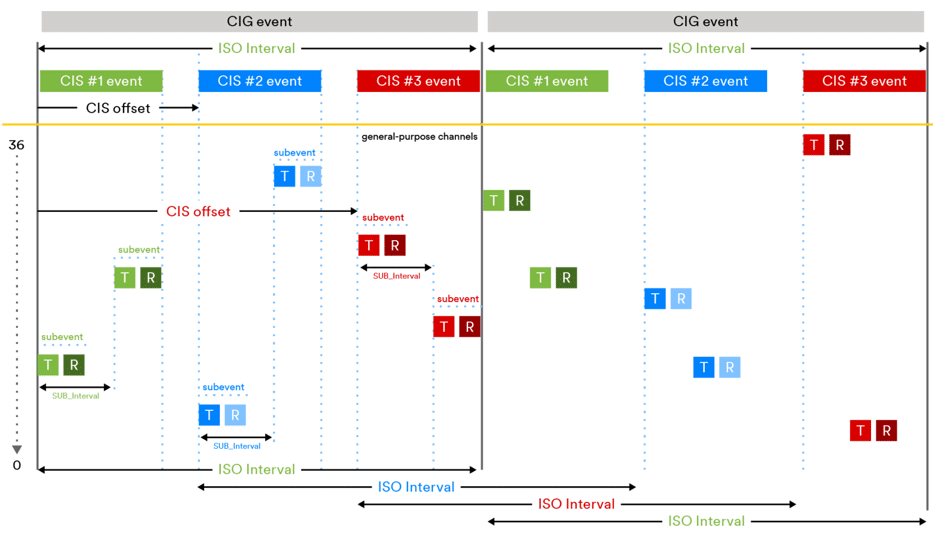

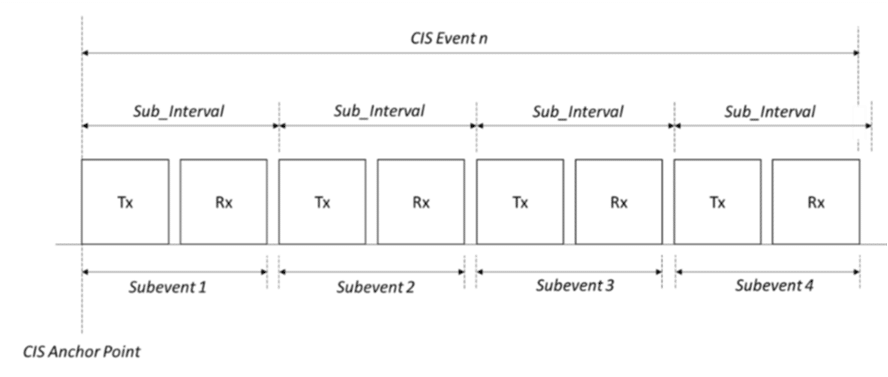

Unicasts (CIS)

Unicasts are 1:1 and are also called “connected”. Here the two parties know each other. Think of a phone conversation or music from a phone.

- A CIG – Connected Isochronous Group – may contain one or more Connected Isochronous Streams – CIS.

- “Connected” means: YES, HANDSHAKES.

- As with BIG, the “ISO interval” is typically 10 ms – this corresponds to the LC3 frame.

- As with BIG, subevents may represent different sound-channels and/or retransmissions.

- As with broadcasts, we may have retransmissions, but now we rely on ACK/NACK from receiving side – in the same subevent as the transmission.

- When a transmission is considered a success, no more retransmissions are sent – power and spectrum are thus saved. Still – we cannot render until the last configured retransmission would have taken place (see LC3).

The figure above shows a CIG with CIS subevents. Note that data (including acknowledge-type info) can go in both directions now.
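The practical difference between broadcast and connected retransmissions can be sketched with a simple probability model. Assuming each attempt succeeds independently with probability p and that ACKs are never lost (both simplifications), a broadcaster always sends 1 + R copies, while a connected sender stops at the first success. The chance of losing the packet altogether is the same in both cases, and so is the latency, since the receiver waits for the last scheduled slot anyway. The function names and the numbers in the example are hypothetical.

```python
def broadcast_transmissions(retransmissions: int) -> int:
    """Without ACKs, every packet is always sent 1 + retransmissions times."""
    return 1 + retransmissions


def connected_expected_transmissions(retransmissions: int, p_success: float) -> float:
    """Expected sends when the sender stops after the first ACKed success.

    Attempt k (0-based) only happens if all k earlier attempts failed.
    """
    p_fail = 1.0 - p_success
    return sum(p_fail ** k for k in range(retransmissions + 1))


def loss_probability(retransmissions: int, p_success: float) -> float:
    """Probability that every scheduled attempt fails (same in both cases)."""
    return (1.0 - p_success) ** (retransmissions + 1)


if __name__ == "__main__":
    r, p = 3, 0.9  # hypothetical: up to 3 retransmissions, 90 % success per attempt
    print(broadcast_transmissions(r))                        # 4
    print(round(connected_expected_transmissions(r, p), 3))  # 1.111
    print(loss_probability(r, p))
```

In this example the connected stream sends roughly a quarter of the packets that the broadcast stream does, for the same delivery probability.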

LC3

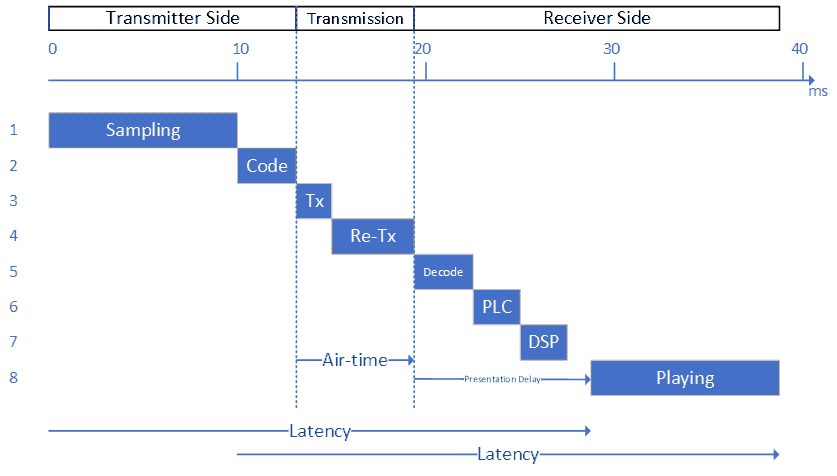

The figure above shows the timing in a Bluetooth Audio system. On the left side we have the transmitter – on the right the receiver. On top we have a timeline. In the example, the latency – the time from when a sample comes in on the left until it comes out on the right – is close to 30 ms.

The elements in the figure above are:

- Sampling: The transmitter samples a block of data into a buffer. With LC3, we cannot start the next step until we have the last sample in the buffer. When LC3 is used, this sampling period is typically 10 ms. It can also be 7.5 ms.

- Code: This is where we do the “code” (coding) part of the codec. LC3 and other modern codecs transform the audio into the frequency domain – LC3 uses an MDCT, a close relative of the FFT – and add a lot of other clever algorithms. The transform needs a full block of data to operate on – which is why we need to wait the full 10 ms in the Sampling step. As the transform uses slightly overlapping blocks, the algorithmic delay is not 10 ms but 12.5 ms. This is dictated by physics and math – throwing in a bigger CPU will not improve the algorithmic delay – but it may reduce any further delays in the “Code” block.

- Transmit: Data is transmitted. Since the data is compressed, and the bit-rate here is much higher than that of the sampling system, this takes a relatively short time.

- Re-Transmit: Bluetooth allows for retransmissions. They come with a latency penalty. If the parties have agreed on e.g., (up to) 3 retransmissions, the receiver cannot start playing sound as soon as it has the first successful reception. The previous block – or the next – may need all retransmissions, and as the samples must come out evenly spread, the receiver will always await the end-time of the last retransmission. In a 1:1 (connected) scenario there is a handshake, and retransmissions can be skipped once we have a successful transmission. This allows the parties to save battery and spares the spectrum from unnecessary “noise” – but we still have to wait.

- Decode: This is the decode part of the codec. It is as fast as the CPU allows.

- PLC: This is “Packet-Loss Concealment”. If the receiver hasn’t received a specific block of data by the time of the last agreed retransmission, it needs to give up. It will then try to make sure that whatever comes out of the speaker sounds as close as possible to what could be expected. Not an easy task.

- DSP: This is “Digital Signal Processing”. It may be filtering or other more advanced stuff. Obviously the codec, PLC and transmission also use DSP. This block, however, is application dependent.

- Playing: Finally, the audio comes out. This takes exactly as long as the sampling.

A device may be programmed to a given Presentation Delay. Just like we need to await the time for the last retransmission, we also need to wait for other slower speakers. If e.g., a hearing aid is mounted in the left ear, and a consumer earbud is mounted in the right, they will be configured for a Presentation Delay that the slowest device can handle.
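Choosing a common Presentation Delay can be sketched as a small range intersection. Assuming each device reports the (minimum, maximum) delay it can handle, the slowest device sets the lower bound, and the chosen value must not exceed any device’s maximum. The Python below picks the smallest value that works for everyone, to keep latency low; the device names and ranges are hypothetical.

```python
from typing import Dict, Tuple


def common_presentation_delay_us(ranges: Dict[str, Tuple[int, int]]) -> int:
    """Pick one presentation delay that every device can handle.

    ranges maps a device name to its (minimum, maximum) supported delay in
    microseconds. The slowest device sets the lower bound, so we choose the
    largest minimum and check that it does not exceed any device's maximum.
    """
    lowest_common = max(lo for lo, _ in ranges.values())
    highest_common = min(hi for _, hi in ranges.values())
    if lowest_common > highest_common:
        raise ValueError("no presentation delay satisfies all devices")
    return lowest_common


if __name__ == "__main__":
    # Hypothetical capabilities for a mixed pair of devices.
    devices = {
        "left hearing aid": (20_000, 40_000),
        "right earbud": (25_000, 60_000),
    }
    print(common_presentation_delay_us(devices))  # 25000
```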

Extended and Periodic Advertising

As described earlier, advertisements were introduced with the packet-based BLE, in order to allow peripherals – typically suppliers of data – and centrals – typically consumers of data – to find each other and connect. This advertising always took place on channels 37, 38 and 39. Note that the timing of these original advertisements includes a pseudo-random element. This is to help the packets – eventually – get out of the “shadow” of other advertisers. The concept works as designed, but also means that you may need to look for advertisers for a long time before you have found them all.

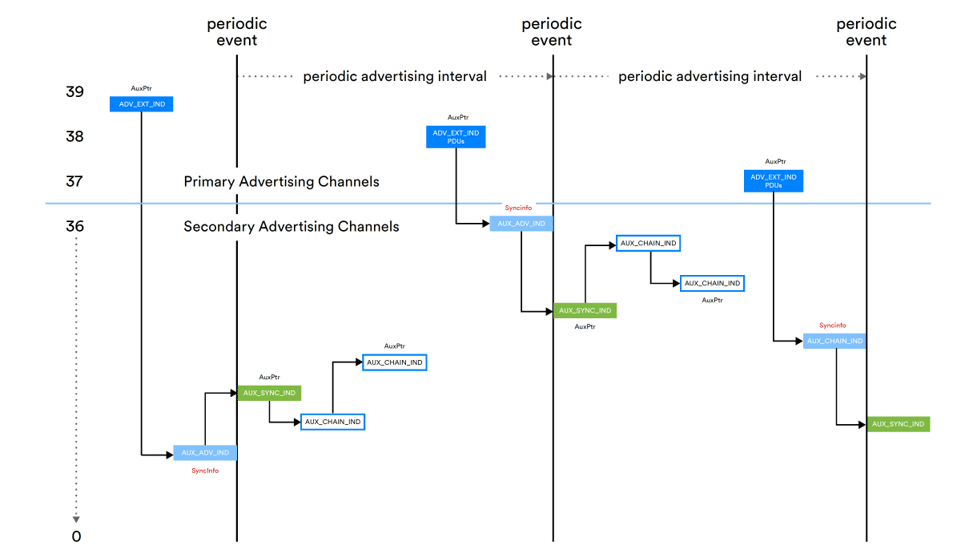

Later (Bluetooth 5.0), Extended Advertising was introduced, together with Periodic Advertising. Now the usual data-channels – 0-36 – are also used, and with Periodic Advertising the timing is fully deterministic. This is necessary to convey all the information that a listener might need. The listener’s link-layer will need a lot of technical information about the codec used, timing, retransmissions and much more. The figure above shows periodic advertising.
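The difference in timing between the two schemes can be sketched in a few lines of Python. As I understand the spec, legacy advertising adds a pseudo-random advDelay of 0 to 10 ms to every advertising interval, whereas periodic advertising events fall at exact multiples of their interval, so a listener that has synchronized once knows exactly when to listen next. The function names and the 100 ms interval are hypothetical.

```python
import random
from typing import List


def legacy_adv_times_ms(adv_interval_ms: float, count: int, rng: random.Random) -> List[float]:
    """Legacy advertising: each event is advInterval plus a random 0..10 ms advDelay."""
    times, t = [], 0.0
    for _ in range(count):
        times.append(t)
        t += adv_interval_ms + rng.uniform(0.0, 10.0)
    return times


def periodic_adv_times_ms(interval_ms: float, count: int) -> List[float]:
    """Periodic advertising: events at exact multiples of the interval."""
    return [k * interval_ms for k in range(count)]


if __name__ == "__main__":
    rng = random.Random(1)  # fixed seed so the sketch is repeatable
    print([round(t, 1) for t in legacy_adv_times_ms(100.0, 5, rng)])
    print(periodic_adv_times_ms(100.0, 5))  # [0.0, 100.0, 200.0, 300.0, 400.0]
```

The random drift is what lets colliding legacy advertisers eventually separate; the fixed grid is what lets a periodic-advertising listener sleep between events.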

We also want to broadcast metadata about the content, pretty much like the flow-TV listings in newspapers and on the web that tell you which show runs on which channel – and when. If the sound is e.g., for a program in a sports-bar, you may even be able to select different language versions. This metadata is passed on to the application level for the user to select.

Auracast Assistant

Now you may ask: “How will a user be able to select anything on a headset – let alone a hearing aid?” This is where the Auracast Assistant comes in. This can be an app on a phone or a watch, presenting the metadata to the user and allowing the user to make the selection. Newer phones and watches that are BLE 5.2 compatible will be able to do the scanning. This means a most welcome power-saving on the small hearing aids/earbuds. When the phone is not compatible with BLE 5.2, our small devices will need to do the scanning themselves and communicate back and forth with the phone or watch via a standard BLE connection. Not optimal but doable.

More information

I have used drawings from Nick Hunn’s book “Introducing Bluetooth LE Audio”. This recommended reading can be bought in hardback, but can also be downloaded as a PDF from the Bluetooth SIG. At the same place you can also find the “Bluetooth LE primer”. Finally, you can also download “Bluetooth regulatory aspects” here. This is also highly recommended – and not as dry as you might expect.